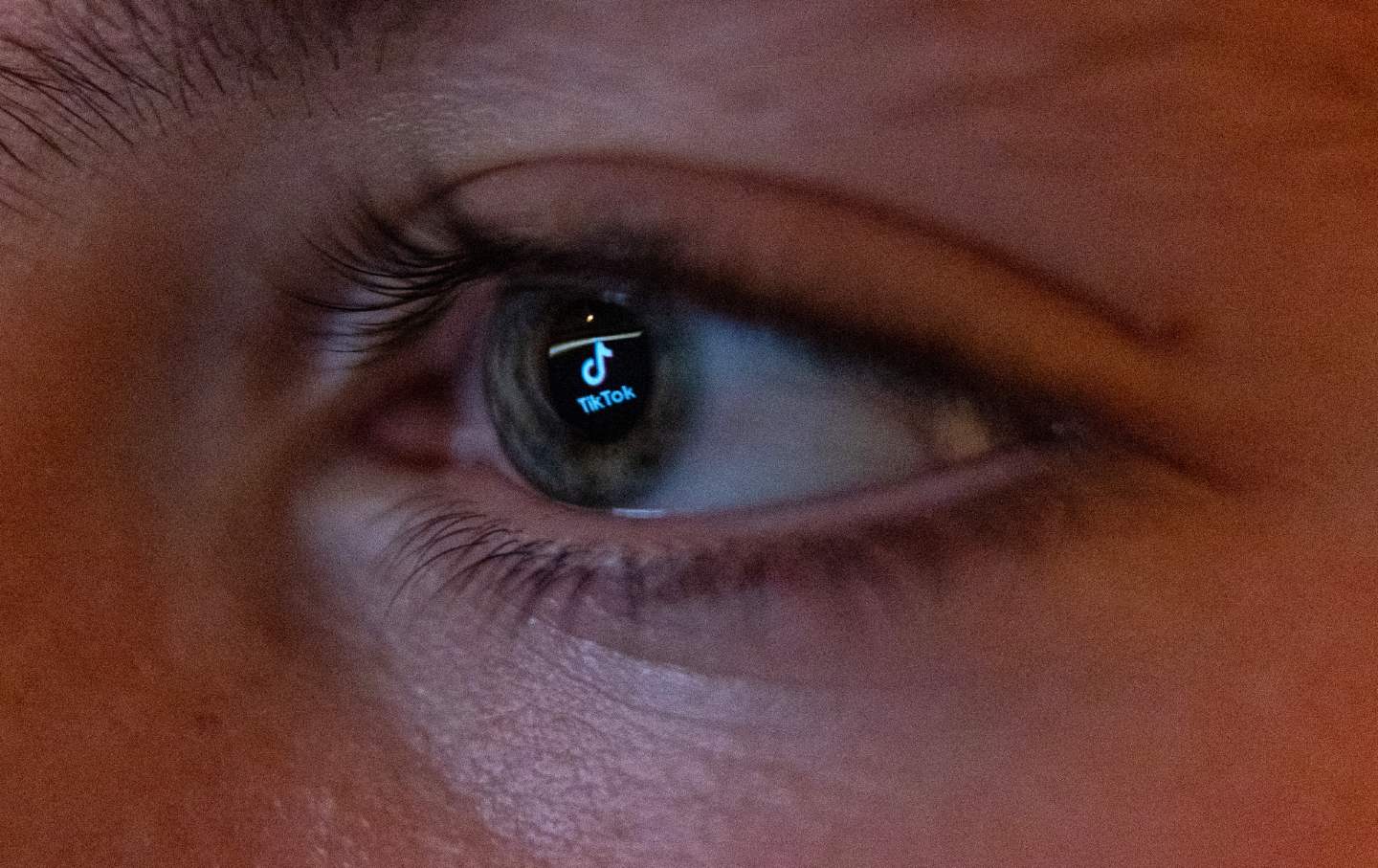

“In Techno Parentis”: Who Should Regulate the Online Lives of Teenagers?

With TikTok, Instagram, and other platforms using algorithms to send teen viewers addictive, dangerous content—and reaping immense profits—self-regulation has clearly failed.

When “Josie” was a 12-year-old fifth-grader passionate about sports, her parents gave her a smartphone. She immediately started searching for sports-related content on TikTok. But TikTok’s feed is targeted based on each user’s data profile, and it started sending Josie (because she is a minor, we have changed her name to protect her privacy) disordered-eating content and videos about how to be anorexic. The content engaged her and kept her on the app; it also isolated her from her friends, her family, and sports. Just after she turned 13, she was hospitalized with severe malnutrition and almost died. She spent 16 days in intensive care.

Josie’s story is not unique. The same thing has happened to millions of kids: A social media company profiles them, identifies the content most likely to keep them online, and serves it to them. Research from the Center for Countering Digital Hate found that TikTok’s recommendation algorithm suggested eating-disorder and self-harm content to new teen accounts within minutes of their signing up for the app. One account was shown suicide content within 2.6 minutes; another was shown eating-disorder content within eight minutes. Such content did not show up this quickly on most accounts, and not all of the recommended content was eating-disorder-themed, but the premise holds: Platforms are targeting children with content that addicts them.

As Jonathan Haidt argues persuasively in his recent book, The Anxious Generation, teen depression, anxiety, and other mental health disorders have spiked sharply since 2010, a rise that can only be explained by the widespread adoption of the smartphone. Both boys and girls have been affected, although the effect on girls is more direct and pronounced. A CDC survey from last year showed that nearly a third of teen girls had seriously considered suicide, up nearly 60 percent from 2011. Most American teen girls (57 percent) now report experiencing persistent sadness or hopelessness, up significantly from 36 percent in 2011.

This is a health crisis, but it is not simply a health crisis: Its effects, such as loneliness and discomfort with risk-taking, ripple beyond measurable suffering to reshape what it means to be an adult and a citizen in a democratic society. And as Haidt points out, social media is not like smoking or drunk driving. The harm it causes is both direct (more time online measurably decreases well-being) and indirect (the more time a teenager’s friends spend online, the less opportunity that teenager has to build meaningful offline relationships).

Dozens of states have now taken up this issue. The policy approaches fall into four different buckets.

First, there are laws that attempt to put platforms in a quasi-parental, or fiduciary, relationship to children by requiring TikTok, Instagram, and YouTube to take on a “duty of care” to minors. This approach, first adopted in the United Kingdom in 2021, arises in part from the horrifying stories told by whistleblowers. Former Facebook employees Frances Haugen and Arturo Béjar both described repeated efforts to get Meta’s corporate management to respond to the company’s own data on harm to teenagers with increased safety measures. A duty of care would force companies to put children’s welfare ahead of simply maximizing profits, and would empower engineers in their conflicts with top management. It is unclear how far a duty of care would extend, but it would at least be something: a binding legal mandate to consider interests other than the bottom line.

Second, and increasingly popular, is the kind of law I worked on as senior counsel for economic justice to New York State Attorney General Letitia James: banning particular functions. Her bill, sponsored by state Senator Andrew Gounardes and Assemblywoman Nily Rozic, is now embroiled in the New York legislature’s budget negotiations. Governor Kathy Hochul strongly supports the law, but Big Tech is spending hand over fist to get it removed from the budget.

The bill would prohibit social media platforms from using “addictive feeds” for minors without parental consent. Addictive feeds are algorithms that unilaterally select what content children see, using their intimate data to push whatever is most likely to keep them online. The bill puts teenagers back in charge of organizing their own pages. It would also limit notifications (banning them at night, for example) and the monetization of children’s data. Other “function-based” approaches include bans on using any geolocation data, and could extend to banning specific technical features, such as infinite scroll or the “speed filter” that tells children how fast they are moving (a feature that led to several teenage deaths and a significant lawsuit). Florida’s age-limit bill, passed last week, is a form of function-based ban: It limits all social media functions for children under 14.

Third, there is the parental-empowerment approach, which effectively adds technical enhancements to the legal status quo. These laws range from requiring platforms to give parents access to their children’s social media accounts on request, to requiring tools that let parents limit how many hours their children spend online, to requiring parental consent before any social media account can be created for a minor.

Finally, there is the approach advocated by Big Tech companies, the ACLU, and some other civil liberties organizations: self-regulation. For these groups, any law that limits how platforms order content necessarily regulates speech. The Electronic Frontier Foundation, for instance, argues that minors have a First Amendment right to access social media. It recently filed an amicus brief in support of Snapchat, arguing that Snapchat should be immune from lawsuits brought by parents of children who overdosed on fentanyl supplied by drug traffickers using Snapchat.

Of all the approaches, the most dangerous is the last, the status quo. Right now, Big Tech companies often spend more time with children than their parents or schools do. The virtual spaces they control are not like playgrounds, parks, or libraries; Instagram is not providing a neutral space for play and exploration but actively engineering its users’ emotions, their connections, their choices, by selecting what they see when and how often. And no amount of social pressure will change the companies’ focus on the bottom line.

Our laws and our society are deeply confused about teenagers, a social category invented in the postwar period with the spread of the car that has since grown into something different. Are they little adults or big children? Their cognitive capacity is equal to (if not greater than) that of legal adults, but their brains are in their most active period of social development. Of all the entities to which we might turn over that development, it would be hard to find one less well-suited to nurturing future adults in a democratic society than TikTok, YouTube, or Instagram.

Finally, we need to confront the peculiar way in which the focus on phones has been coded as conservative, if not outright Republican, and therefore as not something that progressives and leftists should care about. Some of this comes from the libertarian streak within civil liberties organizations like the ACLU and the Electronic Frontier Foundation, which have been consistently skeptical of government regulation, even when it is regulation of big, monopolistic tech companies. Some may come from tribalism and from Google’s old entanglement with the Obama administration: a sense that while Google and the rest of Big Tech may behave in problematic ways, they share our progressive values.

Whatever the reasons, this myth bears little relation to reality. Progressives, who were at the vanguard of protecting children from dangerous industrial labor conditions, should also be at the vanguard of protecting children from having their most intimate data exploited for profit. The endgame of Big Tech’s relationship with children is radical isolation and addiction, the very opposite of the solidarity that defines a thriving, open, democratic system.

The Nation