Print Magazine

October 20, 2014 Issue

Cover art: illustration by Victor Juhasz

Editorial

7 GOP Governors Who May Lose Re-Election

Their extremist policies have made them so vulnerable that corporate America is scrambling to save them.

Column

A Tale of One City by David Brooks

For one-percenters like the Times columnist, city life has never been better.

Run, Karen, Run!

Chicago Teachers Union leader Karen Lewis is eyeing Mayor Emanuel’s job.

Letters

Books & the Arts

A Theater Without Qualities

Immersive theater has no real style—except to fetishize its look.

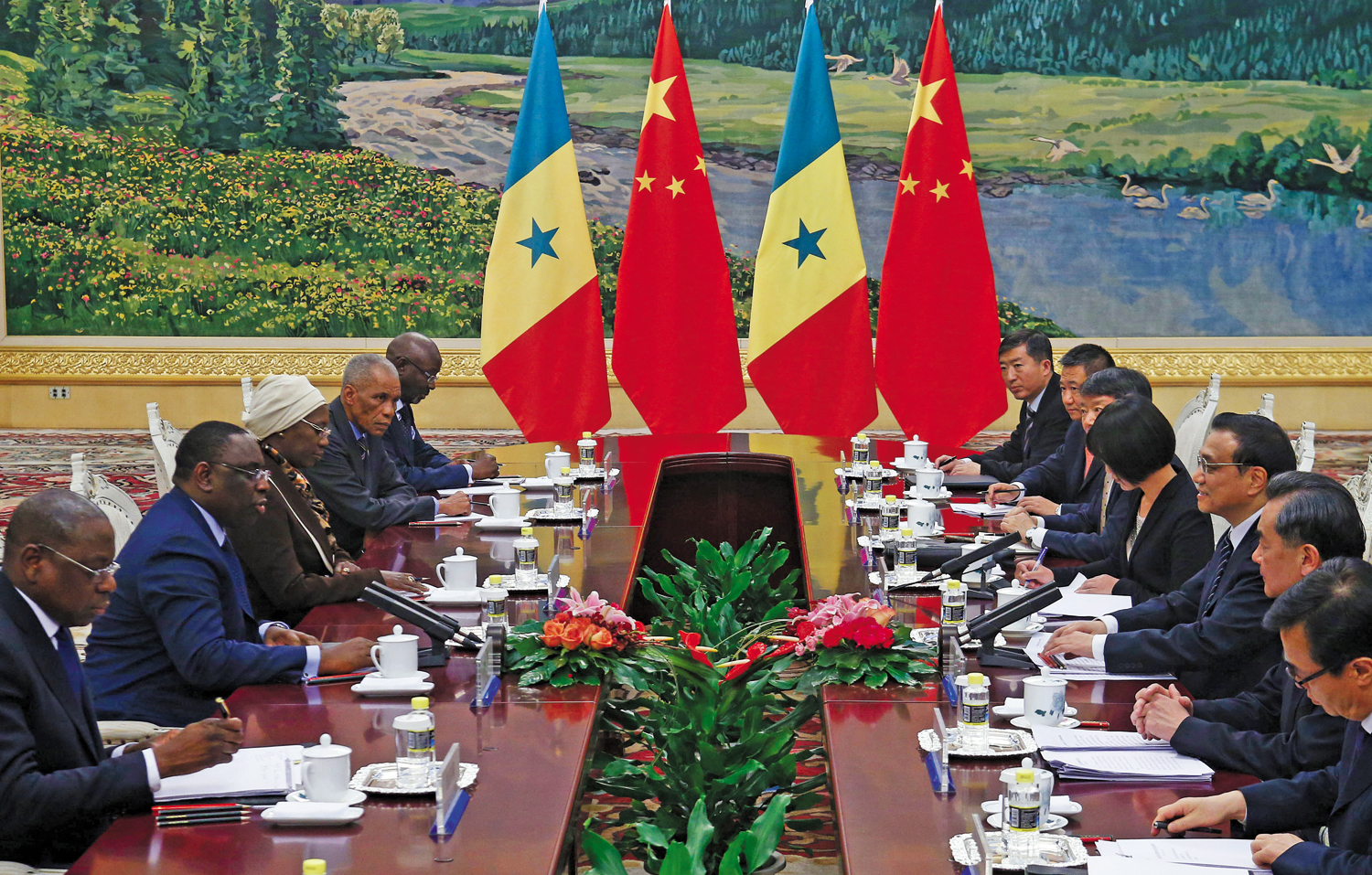

China’s New Frontiers

How Africa and China’s own borderlands became the center of Beijing’s new empire.

Poetry and Catastrophe

By privileging historical catastrophe, a new poetry anthology narrows the definition of art.

Extinction Pop

Choreographed for maximum appeal, pop culture likes to say yes. History is made by those who say no.